And now the pretty graphs…

Below are some pretty graphs that put no-caching to some serious shame.

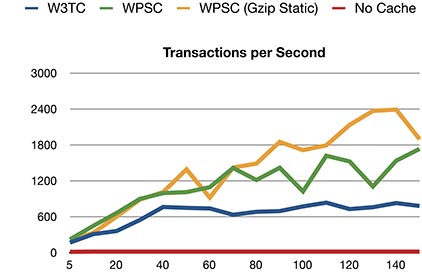

Transactions per Second

The more visitors you can serve at once, the better your site will hold up when a traffic onslaught hits. If it takes 5 seconds for each visitor just to get a page, the site is going to be very slow once more than a couple of people are accessing it at the same time. Some visitors may simply give up and leave at that point.

And here’s the data for each test run (decimal values shaved off). The bolded numbers indicate a test that didn’t run for the full 5-minute duration. The only scenario affected was no caching, which at 140 and 150 concurrent users lasted only 26 and 12 seconds before stopping from too many failures.

| Concurrent Browsers | W3 Total Cache | WP Super Cache | WPSC w/ Gzip Precompression | No Caching |
|---|---|---|---|---|
| 5 | 167 | 220 | 206 | 10 |
| 10 | 307 | 445 | 330 | 14 |
| 20 | 359 | 660 | 601 | 14 |
| 30 | 544 | 896 | 883 | 14 |
| 40 | 760 | 992 | 1010 | 14 |
| 50 | 745 | 1008 | 1388 | 14 |
| 60 | 736 | 1091 | 917 | 14 |
| 70 | 630 | 1412 | 1418 | 14 |
| 80 | 679 | 1211 | 1485 | 14 |
| 90 | 691 | 1415 | 1848 | 14 |
| 100 | 769 | 1021 | 1710 | 14 |
| 110 | 832 | 1616 | 1791 | 14 |
| 120 | 725 | 1522 | 2132 | 14 |
| 130 | 756 | 1103 | 2365 | 14 |
| 140 | 826 | 1533 | 2388 | **14** |
| 150 | 777 | 1730 | 1894 | **14** |

As you can see above, caching with nearly any method gives you a very significant boost over not caching at all. Without caching, the number of transactions per second stays flat at about 14 from 10 concurrent browsers all the way up to 150. And at 140 and 150 browsers, the no-caching scenario ended the test early because of too many failures (both runs lasted less than 30 seconds).
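To put a number on that boost, the short Python sketch below divides each caching column by the no-caching column for a few rows copied from the table above. The names `TPS`, `SCENARIOS` and `speedup` are illustrative only. Because the decimals were shaved off the original figures, treat the ratios as approximate.

```python
# A few rows copied from the transactions-per-second table above.
# concurrent browsers -> (W3TC, WPSC, WPSC w/ gzip, no caching)
TPS = {
    5: (167, 220, 206, 10),
    50: (745, 1008, 1388, 14),
    100: (769, 1021, 1710, 14),
    130: (756, 1103, 2365, 14),
}

SCENARIOS = ("W3 Total Cache", "WP Super Cache", "WPSC w/ Gzip")


def speedup(row):
    """Return each caching scenario's throughput as a multiple of no caching."""
    *cached, baseline = row
    return {name: tps / baseline for name, tps in zip(SCENARIOS, cached)}


if __name__ == "__main__":
    for browsers, row in TPS.items():
        ratios = speedup(row)
        summary = ", ".join(f"{name} {ratio:.0f}x" for name, ratio in ratios.items())
        print(f"{browsers:>3} browsers: {summary}")
```

At 100 concurrent browsers, for instance, WPSC with gzip precompression handled about 122 times the transactions per second of the uncached setup (1710 / 14).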

Speaking of failures…

Failed Requests

Here you’ll see that there were no failures except in three cases. The first is 372 failures during the 150-browser load for W3 Total Cache, out of 233,131 successful transactions. The other two are in the no-caching scenario, which lasted only 29 seconds (140 browsers) and 12 seconds (150 browsers) before the test ended from too many failures. In that short time, the no-caching setup managed only 416 and 172 successful transactions respectively, against roughly a thousand failed transactions each.
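Expressed as percentages, the gap between those three cases is stark. The Python sketch below uses only the counts quoted above; `failure_rate` and `throughput` are hypothetical helper names, and the 300 seconds is the full 5-minute test duration.

```python
def failure_rate(failed: int, successful: int) -> float:
    """Percentage of all attempted transactions that failed."""
    total = failed + successful
    return 100.0 * failed / total if total else 0.0


def throughput(successful: int, seconds: float) -> float:
    """Successful transactions per second over a run."""
    return successful / seconds


if __name__ == "__main__":
    # W3 Total Cache at 150 browsers, full 5-minute (300 s) run
    print(f"W3TC @150: {failure_rate(372, 233_131):.2f}% failed, "
          f"{throughput(233_131, 300):.0f} trans/sec")
    # No caching at 140 and 150 browsers, runs cut short after 29 s and 12 s
    print(f"No cache @140: {failure_rate(1026, 416):.1f}% failed, "
          f"{throughput(416, 29):.1f} trans/sec")
    print(f"No cache @150: {failure_rate(1032, 172):.1f}% failed, "
          f"{throughput(172, 12):.1f} trans/sec")
```

W3 Total Cache failed about 0.16% of its requests while sustaining roughly 777 transactions per second, which matches its entry in the transactions table. The uncached setup failed about 71% and 86% of its requests while stuck at around 14 per second.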

| Concurrent Browsers | W3 Total Cache | WP Super Cache | WPSC w/ Gzip Precompression | No Caching |
|---|---|---|---|---|
| 5 | 0 | 0 | 0 | 0 |
| 10 | 0 | 0 | 0 | 0 |
| 20 | 0 | 0 | 0 | 0 |
| 30 | 0 | 0 | 0 | 0 |
| 40 | 0 | 0 | 0 | 0 |
| 50 | 0 | 0 | 0 | 0 |
| 60 | 0 | 0 | 0 | 0 |
| 70 | 0 | 0 | 0 | 0 |
| 80 | 0 | 0 | 0 | 0 |
| 90 | 0 | 0 | 0 | 0 |
| 100 | 0 | 0 | 0 | 0 |
| 110 | 0 | 0 | 0 | 0 |
| 120 | 0 | 0 | 0 | 0 |
| 130 | 0 | 0 | 0 | 0 |
| 140 | 0 | 0 | 0 | 1026* (416 successful) |
| 150 | 372 (233,131 successful) | 0 | 0 | 1032* (172 successful) |

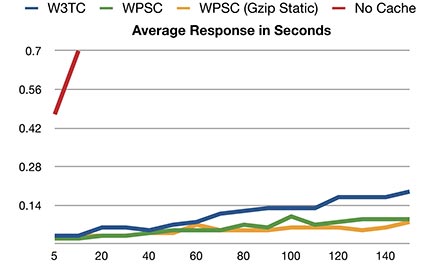

Response Time

As you can see, without caching, not only is the number of requests served per second low, but so is the tolerance to failure. But how quickly did each scenario respond to the browser’s request on average? Below is the data for the average response time in seconds.

| Concurrent Browsers | W3 Total Cache | WP Super Cache | WPSC w/ Gzip Precompression | No Caching |
|---|---|---|---|---|
| 5 | 0.03 | 0.02 | 0.02 | 0.47 |
| 10 | 0.03 | 0.02 | 0.03 | 0.70 |
| 20 | 0.06 | 0.03 | 0.03 | 1.39 |
| 30 | 0.06 | 0.03 | 0.03 | 2.09 |
| 40 | 0.05 | 0.04 | 0.04 | 2.76 |
| 50 | 0.07 | 0.05 | 0.04 | 3.43 |
| 60 | 0.08 | 0.05 | 0.07 | 4.20 |
| 70 | 0.11 | 0.05 | 0.05 | 4.90 |
| 80 | 0.12 | 0.07 | 0.05 | 5.49 |
| 90 | 0.13 | 0.06 | 0.05 | 6.25 |
| 100 | 0.13 | 0.10 | 0.06 | 6.88 |
| 110 | 0.13 | 0.07 | 0.06 | 7.61 |
| 120 | 0.17 | 0.08 | 0.06 | 8.16 |
| 130 | 0.17 | 0.09 | 0.06 | 8.90 |
| 140 | 0.17 | 0.09 | 0.06 | 8.26 |
| 150 | 0.19 | 0.09 | 0.08 | 6.19 |

Response time is basically how long it takes the server to respond to a browser’s request. The first three scenarios kept the average response time under 0.20 seconds. Without caching, the response time degraded quickly as the load increased. For example, with no caching in the 20 concurrent browser test, it took 1.39 seconds on average before the server even responded with data.
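The two tables can be cross-checked with Little's Law, which states that the average number of requests in a system equals throughput times average response time (L = λW, so W = L / λ). The Python sketch below applies it to the no-caching column. It assumes every simulated browser fires its next request as soon as the previous one completes, and it leaves out the 140 and 150 rows because those runs were cut short.

```python
# No-caching rows copied from the two tables above:
# concurrent browsers -> (transactions per second, measured avg response time in s)
NO_CACHE = {
    5: (10, 0.47),
    20: (14, 1.39),
    50: (14, 3.43),
    100: (14, 6.88),
    130: (14, 8.90),
}


def littles_law_response_time(concurrency: int, throughput: float) -> float:
    """Little's Law rearranged: W = L / lambda (assumes browsers never idle)."""
    return concurrency / throughput


if __name__ == "__main__":
    for browsers, (tps, measured) in NO_CACHE.items():
        predicted = littles_law_response_time(browsers, tps)
        print(f"{browsers:>3} browsers: predicted {predicted:.2f} s, measured {measured:.2f} s")
```

The predicted times (0.50 s, 1.43 s, 3.57 s, 7.14 s and 9.29 s) sit slightly above the measured averages, partly because the throughput column had its decimals shaved off. Either way, the pattern fits a server saturated at about 14 transactions per second, where each additional browser just adds to the queue.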

Conclusion

Be it file-based caching, memcache, PHP accelerators, or whatever other method tickles your fancy, caching is important for WordPress. Without it, not only does your server suffer under higher loads, but so do your visitors. For their sake, consider installing W3 Total Cache, WP Super Cache, or one of the many other caching plugins available for WordPress.

On the next page are the Nginx server configurations for each scenario.

In the memcached configuration, the obvious difference is that PHP is invoked on every single request to the site, which adds the overhead of PHP checking the request, then retrieving the keyed item from memcached and serving it. In the file-based configuration (which both W3 Total Cache and WP Super Cache can be configured to use), Nginx bypasses PHP altogether and serves static content directly from disk.
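The difference between the two request paths can be sketched in a few lines of Python. This is a simplified model, not how Nginx is implemented: it assumes a WP Super Cache-style layout where each page is saved as `wp-content/cache/supercache/<host>/<path>/index.html`, and it skips the query-string and logged-in-cookie checks a real setup needs. `cached_file_for` and `route` are hypothetical names.

```python
from pathlib import Path

# Assumed WP Super Cache-style layout; adjust to match your own cache directory.
CACHE_ROOT = Path("wp-content/cache/supercache")


def cached_file_for(doc_root: Path, host: str, uri: str) -> Path:
    """Map a request to the static file a disk cache would have written.

    "/2010/05/post/" -> doc_root/wp-content/cache/supercache/host/2010/05/post/index.html
    (no sanitizing of the URI; this is a sketch only)
    """
    return doc_root / CACHE_ROOT / host / uri.strip("/") / "index.html"


def route(doc_root: Path, host: str, uri: str) -> str:
    """Decide who answers the request: the web server alone, or PHP."""
    candidate = cached_file_for(doc_root, host, uri)
    if candidate.is_file():
        # File-based setup: the web server sends the file; PHP never starts.
        return f"static:{candidate}"
    # Memcached setup (or a cache miss): PHP starts, checks the request,
    # looks up the key in memcached, and only then serves the page.
    return "php:/index.php"
```

In the file-based configuration, a cache hit returns at the `is_file()` check without PHP starting at all. In the memcached configuration there are no files on disk, so every request takes the second branch, which is where the extra overhead comes from.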

As to why: first off, I don’t get that much traffic, and even if I do, the current configuration can still handle quite a bit. Also, with this configuration I don’t have to modify Nginx with excessive rewrite rules to check for a disk-based cache, and newly published content is easily refreshed with the memcache setup. I used to use WP Super Cache with a preloaded cache almost exclusively, which was fast indeed under very high load (which I almost never get). But it only caches files, it doesn’t improve performance for logged-in users, and I had to make sure to clear the disk cache whenever I made a change to the design or site.

Great article.

However, I have the following problem.

My WP setup is as follows.

Currently I am on shared hosting with WP + W3 Total Cache, and during peak hours my site is very slow. That is mainly because I get a huge amount of traffic from Google.

My website caches plenty of keywords with AskApache Google 404 and Redirection.

What happens is that traffic from Google goes to /search/what-ever-keywords, which is dynamically created every time, and that is killing my system.

The problem is I have no idea how to help my poor server and cache that kind of traffic.

Do you have any advice for that?

Regards,

Peter

That’s a rather good question, especially considering you can’t easily cache random searches. I looked into it, and it also seems to be a common way of overloading a WordPress site.

The Nginx web server does provide one feature that may help, called the Limit Request Module (http://wiki.nginx.org/HttpLimitReqModule).

Essentially, you could have a location block like so (the limit_req_zone line goes somewhere in the http block):

```nginx
limit_req_zone $binary_remote_addr zone=one:10m rate=1r/s;

location /search {
    limit_req zone=one burst=5;
    rewrite ^ /index.php;
}
```

What happens is that the location /search is limited to a rate of 1 request per second per visitor IP address. A burst of 5 means a visitor can exceed this rate by up to 5 requests (those excess requests are delayed rather than served immediately) before further requests are hit with a 503 error response. Google, for example, sees a 503 as a kind of de facto “back off” response.
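The rate/burst interaction is easier to see in a small simulation. The Python sketch below is a simplified model of the leaky-bucket behaviour the module documents, for a single client IP: excess requests are tracked, drained at `rate` per second, and any request that would push the excess past `burst` is rejected. It does not model how long the accepted-but-delayed requests actually wait, and `limit_req` here is a hypothetical function name, not an Nginx API.

```python
def limit_req(arrivals, rate, burst):
    """Simplified leaky-bucket model of limit_req for a single client key.

    arrivals: sorted request times in seconds
    rate:     allowed requests per second (rate=1r/s -> 1.0)
    burst:    how many requests may exceed the rate before rejection
    Returns one bool per request: True = accepted (maybe delayed), False = 503.
    """
    excess = 0.0  # requests currently above the allowed rate
    last = None   # time of the last accepted request
    decisions = []
    for t in arrivals:
        if last is None:
            candidate = 0.0  # first request from this client always passes
        else:
            # The bucket drains at `rate` per second; this request adds one more.
            candidate = max(0.0, excess - rate * (t - last) + 1.0)
        if candidate > burst:
            decisions.append(False)  # over the burst: rejected, state unchanged
        else:
            excess, last = candidate, t
            decisions.append(True)
    return decisions


if __name__ == "__main__":
    # Ten requests at the same instant, with rate=1r/s and burst=5
    flood = limit_req([0.0] * 10, rate=1.0, burst=5)
    print(flood.count(True), "accepted,", flood.count(False), "rejected")
    # One request per second never exceeds the rate
    steady = limit_req([0.0, 1.0, 2.0, 3.0], rate=1.0, burst=5)
    print(all(steady))
```

In this model, ten simultaneous requests come out as 6 accepted and 4 rejected: the first passes straight away and the next five fill the burst (in Nginx those five would be delayed), while a client sending one request per second is never rejected.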

The rewrite is there because on WordPress there shouldn’t ever be an actual folder named search, and all search requests go to /index.php anyway.

kbeezie, thank you for your reply.

I don’t think that is the issue here. What happens right now is that between 7pm and 9pm I am being hit hard by Google… something like 20-50 req/s.

So I would probably need 8 cores or something… which is super expensive… plus it is only needed for part of the day.

I need to look into your limiting module. What would be great is if someone who searches too much were redirected to the main page, or to a specific page where they would see a warning instead of a 503 error. A 503 is a bit too drastic, I think.

What do you think, is that possible?

By the way, I learned that the rewrite line actually takes effect before the limiting module has a chance to act. So you have to use `try_files $uri /index.php;` instead, which allows the limiting module to act before Nginx tries for files.

As far as Google goes, 503 is the de facto standard “back off” signal to Google’s servers. You can, however, create a Google Webmaster Tools account (https://www.google.com/webmasters/tools/), add your site, verify it, and then set your crawl rate manually rather than letting Google set it automatically. This gives you some control in preventing Google from crawling your site too quickly.

More hardware isn’t always the key to improving your site. As for an 8-core machine, even a 4-core (or 4-core with Hyper-Threading) would be fine and not all that expensive, unless you go with prices from places like Rackspace (though it will seem expensive if you’re only used to VPS pricing).